If you’re just starting to deploy multiple large models in Microsoft Power BI, it might be a learning experience, but it’s well worth the effort. This article will explore some insights to give you a head start and help ensure that you’re optimizing query performance.

Large data sizes pose a challenge to Power BI. Why? They take up enormous amounts of memory and can need a long time to refresh. By default, Power BI needs a large amount of memory, thus can be expensive to host.

How do you proceed?

You have three options:

- Deploy to SSAS Tabular

- Use Direct Query

- Use large-model mode in Power BI

Option 1: Deploy to SSAS Tabular

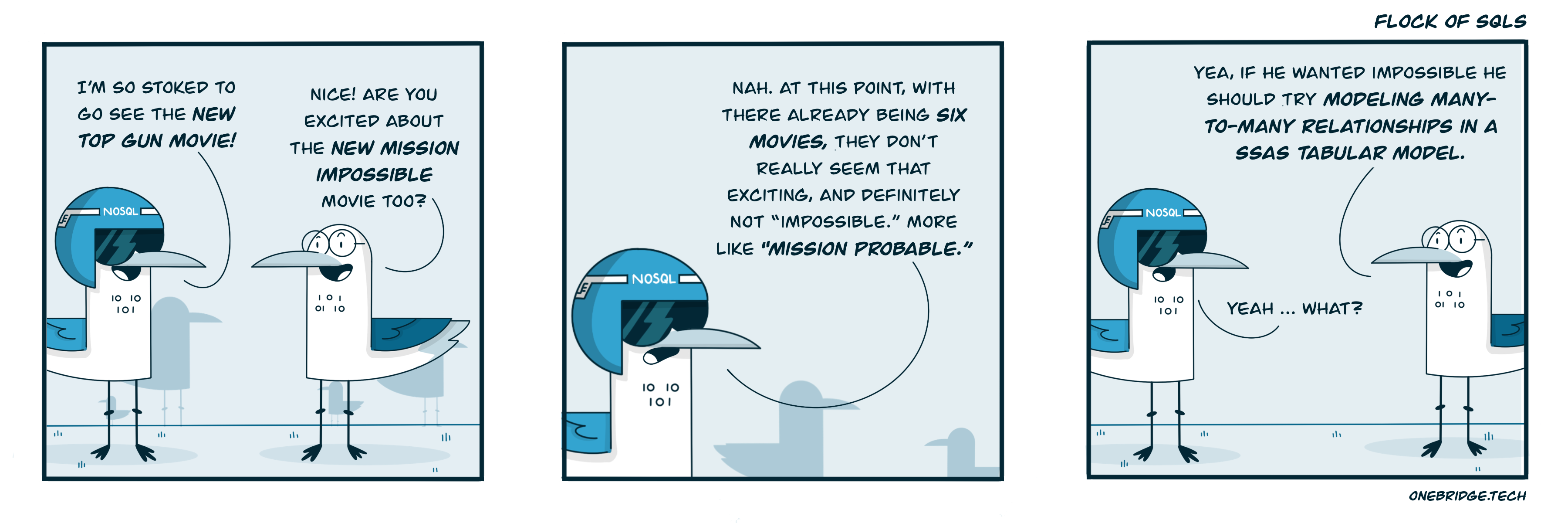

SQL Server Analysis Services (SSAS) and Power BI share a common in memory engine. Therefore, in theory, they can share the same models. SSAS, though currently fully supported by Microsoft, is slowly being sunset in favor of Power BI. Consequently, from a long-term planning perspective, SSAS should not be your first choice to deploy a model.

However, using SSAS Tabular does have some advantages, mostly related to cost. SSAS can be less expensive than Power BI Premium capacity in certain scenarios. SSAS uses SQL Server licensing, so if it can be installed on an existing SQL Server VM, it’s essentially free. It may be cheaper to pay the SQL Server licensing up front rather than pay $5,000 per month indefinitely with Power BI Premium capacity.

Note that SSAS only hosts the engine. If you want to host reports, you will still need a Power BI license.

Option 2: Use Direct Query

Power BI has three storage modes: import, direct query, and dual (combination).

Import makes a copy of the data and stores it compressed in memory. The import/compression process takes time. It also needs enough RAM to live in. In addition, your data is now stale until the next refresh.

DirectQuery skips this by querying the target database directly by using dynamically constructed SQL. This means your data is always fresh and you don’t need to worry about importing data.

The tradeoff is that you lose access to some Power BI functions and DAX measures. You’re also relying on the underlying database to be performant. It takes a very beefy database that has been carefully indexed to make it remotely performant. It can work in theory, but every implementation I’ve seen has had very slow performance.

And finally, there’s dual storage. Dual storage allows you to store some tables in memory and leave others in the database. If Power BI cannot complete an operation with all in memory tables, it will fall back to DirectQuery.

A recently announced feature for dual storage is hybrid tables. They allow some rows in the table to be in memory and others to be DirectQuery.

Option 3: Use Power BI Large Model Mode

We decided to use Large Model Mode when working with a client recently, and it was the right choice. But there are hoops you must jump through to get it to work.

Here is the process:

1. In Power BI Premium, select “Large Model Mode” for models greater than 10GB (in memory compressed).

2. Enable Large Model Mode. This is the easy part. It’s just a couple of button clicks.

3. Enable Incremental Refresh. If you have a large model, you can’t probably afford to do a full refresh of it every day. Incremental refresh allows you to only refresh a portion of a table. If your data can load in under four hours, you are good. If not, you’ll need to perform an advanced refresh.

4. Perform an Advanced Refresh. This is where things start to get ugly. Power BI has a limit of four hours for a refresh. If your data takes longer than that, you’ll need to go through a more complicated process.

- Set up incremental refresh like normal but filter all data out of the table. When deployed Power BI will create a series of partitions that are accessible via SQL Server Management Studio.

- Remove the table filters and do a metadata only deployment. You’ll need to use the ALM Toolkit. You’ll want to update the table definition but not remove the partitions.

- Open up SSMS and start reprocessing the partitions one by one.

I can attest that this process is extremely tedious. Fingers crossed that Microsoft will fix the clunkiness of this functionality in the future, but meanwhile this option gets the job done well, and your hard work will pay off.

Conclusion

If we step back and look at the big picture, the best way to go about choosing the right path to deploying large models in Power BI is to consider your performance needs and the technical capability of your Power BI developers. DirectQuery allows access to enormous amounts of data, but at the cost of query time performance. Large Model Mode allows for much bigger in-memory models, but requires delicate and complicated technical work.

If you need any help, our Onebridge team is full of experts in Power BI. We’re a data and analytics consultancy, so this is our specialty. Just reach out with questions. We’ve even written a book on Power BI to be released later this year by O’Reilly, the highly respected technical publisher.

Want more information? Check out our article Power(ful) BI: How to Get the Most Out of Power BI.